Difference between revisions of "Hardware"

From DHVLab


Revision as of 13:34, 8 September 2016


Server Hardware

OVirt Engine Cluster

Highly available Linux cluster that hosts the oVirt Engine inside a KVM virtual machine.

2x

HP ProLiant DL360 G5 Rack Mount Chassis

2 GHz quad-core 64-bit Intel® Xeon®, 12 MB L2 cache

32GB Memory

QLogic ISP2432-based 4Gb Fibre Channel

3x Broadcom Corporation NetXtreme II BCM5708 Gigabit Ethernet

80 GB HDD

OVirt Nodes

Nodes for hosting virtual machines. There are currently two nodes; new nodes can easily be added to scale the platform.

2x

HP ProLiant DL360 Gen9 Rack Mount Chassis

2.3 GHz 10-core 64-bit Intel® Xeon® Processor E5-2650 v3, 25 MB cache

32GB Memory

2x QLogic ISP2432-based 4Gb Fibre Channel

4x Broadcom Corporation NetXtreme BCM5719 Gigabit Ethernet

Fibre Channel Setup

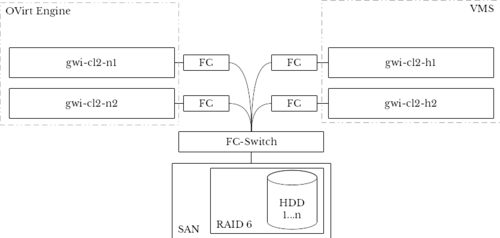

Shared storage, e.g. for backups or VM images, is located on a SAN that is attached via Fibre Channel. The SAN has several spare disks to recover from disk failures. It combines multiple physical disk drives into a single logical RAID-6 unit for data redundancy and improved performance.
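To get a feel for what RAID-6 costs in capacity, the following minimal sketch computes the usable size of a RAID-6 group, in which two disks' worth of space is reserved for parity. The disk count and disk size in the example are hypothetical; the actual SAN layout is not documented on this page.

```python
def raid6_usable_capacity(disk_count: int, disk_size_tb: float) -> float:
    """Return the usable capacity (in TB) of a RAID-6 group.

    RAID-6 stores two independent parity blocks per stripe, so the
    capacity of two member disks is not available for data.
    """
    if disk_count < 4:
        raise ValueError("RAID-6 needs at least 4 member disks")
    return (disk_count - 2) * disk_size_tb


# Hypothetical example: 8 member disks of 2 TB each (not the real SAN layout).
print(raid6_usable_capacity(8, 2.0))  # 12.0
```

Spare disks are not counted as member disks here, since they only join the group after a failure.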

Physical Network Setup

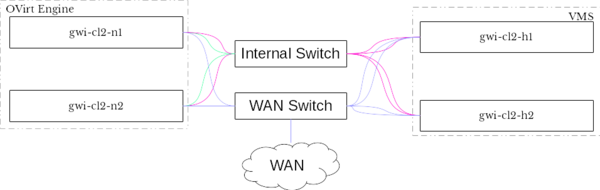

Each oVirt Engine cluster host has three network ports: one for WAN access, one for cluster communication, and one for the command-and-control network that connects all nodes of the oVirt setup.

On the oVirt nodes, two balance-xor bonds are created, each containing two adapters, to provide load balancing and redundancy. One bond is used for WAN communication, the other for VM communication.

Green: Cluster LAN, Red: VM Network, Blue: WAN
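How a balance-xor bond spreads traffic over its two adapters can be illustrated with a simplified sketch. It assumes the bond uses a MAC-based (layer 2) transmit hash; the hash policy actually configured on the nodes is not stated here, and the Linux bonding driver mixes further fields into its hash. The function names and MAC addresses are made up for illustration.

```python
def parse_mac(mac: str) -> bytes:
    """Convert a colon-separated MAC address string into 6 raw bytes."""
    return bytes(int(part, 16) for part in mac.split(":"))


def select_adapter(src_mac: str, dst_mac: str, adapter_count: int) -> int:
    """Pick the outgoing adapter index for a frame (simplified layer 2 hash).

    XORs the last byte of the source and destination MAC addresses and
    takes the result modulo the number of adapters in the bond.
    """
    src = parse_mac(src_mac)
    dst = parse_mac(dst_mac)
    return (src[5] ^ dst[5]) % adapter_count


# Hypothetical addresses: two peers end up on different adapters of a 2-adapter bond.
print(select_adapter("52:54:00:00:00:01", "52:54:00:00:00:0a", 2))  # 1
print(select_adapter("52:54:00:00:00:01", "52:54:00:00:00:0b", 2))  # 0
```

Because the result depends only on the address pair, all frames between the same two hosts leave through the same adapter, which keeps frame ordering intact while different peers are distributed across the bond.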

Each host has a separate iLO port that is connected to the internal network.